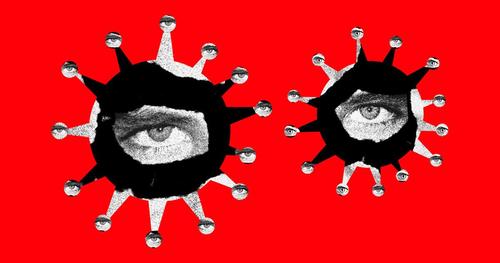

Leaked Docs Reveal How China's 'Army Of Paid Internet Trolls' Helped Censor COVID-19

25 December, 2020

Authored by Raymond Zhong, Paul Mozur and Aaron Krolik, The New York Times, and Jeff Kao via ProPublica

In the early hours of Feb. 7, China’s powerful internet censors experienced an unfamiliar and deeply unsettling sensation. They felt they were losing control.

The news was spreading quickly that Li Wenliang, a doctor who had warned about a strange new viral outbreak only to be threatened by the police and accused of peddling rumors, had died of COVID-19. Grief and fury coursed through social media. To people at home and abroad, Li’s death showed the terrible cost of the Chinese government’s instinct to suppress inconvenient information.

Yet China’s censors decided to double down. Warning of the “unprecedented challenge” Li’s passing had posed and the “butterfly effect” it may have set off, officials got to work suppressing the inconvenient news and reclaiming the narrative, according to confidential directives sent to local propaganda workers and news outlets.

They ordered news websites not to issue push notifications alerting readers to his death. They told social platforms to gradually remove his name from trending topics pages. And they activated legions of fake online commenters to flood social sites with distracting chatter, stressing the need for discretion: “As commenters fight to guide public opinion, they must conceal their identity, avoid crude patriotism and sarcastic praise, and be sleek and silent in achieving results.”

Special instructions were issued to manage anger over Dr. Li’s death.

The orders were among thousands of secret government directives and other documents that were reviewed by The New York Times and ProPublica. They lay bare in extraordinary detail the systems that helped the Chinese authorities shape online opinion during the pandemic.

At a time when digital media is deepening social divides in Western democracies, China is manipulating online discourse to enforce the Communist Party’s consensus. To stage-manage what appeared on the Chinese internet early this year, the authorities issued strict commands on the content and tone of news coverage, directed paid trolls to inundate social media with party-line blather and deployed security forces to muzzle unsanctioned voices.

Controlling online opinion this way takes much more than simply flipping a switch to block certain unwelcome ideas, images or pieces of news.

China’s curbs on information about the outbreak started in early January, before the novel coronavirus had even been identified definitively, the documents show. When infections started spreading rapidly a few weeks later, the authorities clamped down on anything that cast China’s response in too “negative” a light.

The United States and other countries have for months accused China of trying to hide the extent of the outbreak in its early stages. It may never be clear whether a freer flow of information from China would have prevented the outbreak from morphing into a raging global health calamity. But the documents indicate that Chinese officials tried to steer the narrative not only to prevent panic and debunk damaging falsehoods domestically. They also wanted to make the virus look less severe — and the authorities more capable — as the rest of the world was watching.

The documents include more than 3,200 directives and 1,800 memos and other files from the offices of the country’s internet regulator, the Cyberspace Administration of China, in the eastern city of Hangzhou. They also include internal files and computer code from a Chinese company, Urun Big Data Services, that makes software used by local governments to monitor internet discussion and manage armies of online commenters.

The documents were shared with The Times and ProPublica by a hacker group that calls itself CCP Unmasked, referring to the Chinese Communist Party. The Times and ProPublica independently verified the authenticity of many of the documents, some of which had been obtained separately by China Digital Times, a website that tracks Chinese internet controls.

The CAC and Urun did not respond to requests for comment.

“China has a politically weaponized system of censorship; it is refined, organized, coordinated and supported by the state’s resources,” said Xiao Qiang, a research scientist at the School of Information at the University of California, Berkeley, and the founder of China Digital Times. “It’s not just for deleting something. They also have a powerful apparatus to construct a narrative and aim it at any target with huge scale.”

“This is a huge thing,” he added. “No other country has that.”

Controlling a Narrative

China’s top leader, Xi Jinping, created the Cyberspace Administration of China in 2014 to centralize the management of internet censorship and propaganda as well as other aspects of digital policy. Today, the agency reports to the Communist Party’s powerful Central Committee, a sign of its importance to the leadership.

The CAC’s coronavirus controls began in the first week of January. An agency directive ordered news websites to use only government-published material and not to draw any parallels with the deadly SARS outbreak in China and elsewhere that began in 2002, even as the World Health Organization was noting the similarities.

At the start of February, a high-level meeting led by Xi called for tighter management of digital media, and the CAC’s offices across the country swung into action. A directive in Zhejiang Province, whose capital is Hangzhou, said the agency should not only control the message within China, but also seek to “actively influence international opinion.”

Agency workers began receiving links to virus-related articles that they were to promote on local news aggregators and social media. Directives specified which links should be featured on news sites’ home screens, how many hours they should remain online and even which headlines should appear in boldface.

Online reports should play up the heroic efforts by local medical workers dispatched to Wuhan, the Chinese city where the virus was first reported, as well as the vital contributions of Communist Party members, the agency’s orders said.

Headlines should steer clear of the words “incurable” and “fatal,” one directive said, “to avoid causing societal panic.” When covering restrictions on movement and travel, the word “lockdown” should not be used, said another. Multiple directives emphasized that “negative” news about the virus was not to be promoted.

When a prison officer in Zhejiang who lied about his travels caused an outbreak among the inmates, the CAC asked local offices to monitor the case closely because it “could easily attract attention from overseas.”

Officials ordered the news media to downplay the crisis.

News outlets were told not to play up reports on donations and purchases of medical supplies from abroad. The concern, according to agency directives, was that such reports could cause a backlash overseas and disrupt China’s procurement efforts, which were pulling in vast amounts of personal protective equipment as the virus spread abroad.

“Avoid giving the false impression that our fight against the epidemic relies on foreign donations,” one directive said.

CAC workers flagged some on-the-ground videos for purging, including several that appear to show bodies exposed in public places. Other clips that were flagged appear to show people yelling angrily inside a hospital, workers hauling a corpse out of an apartment and a quarantined child crying for her mother. The videos’ authenticity could not be confirmed.

The agency asked local branches to craft ideas for “fun at home” content to “ease the anxieties of web users.” In one Hangzhou district, workers described a “witty and humorous” guitar ditty they had promoted. It went, “I never thought it would be true to say: To support your country, just sleep all day.”

Then came a bigger test.

“Severe Crackdown”

The death of Li, the doctor in Wuhan, loosed a geyser of emotion that threatened to tear Chinese social media out from under the CAC’s control.

It did not help when the agency’s gag order leaked onto Weibo, a popular Twitter-like platform, fueling further anger. Thousands of people flooded Li’s Weibo account with comments.

The agency had little choice but to permit expressions of grief, though only to a point. If anyone was sensationalizing the story to generate online traffic, their account should be dealt with “severely,” one directive said.

The day after Li’s death, a directive included a sample of material that was deemed to be “taking advantage of this incident to stir up public opinion”: a video interview in which Li’s mother reminisces tearfully about her son.

The scrutiny did not let up in the days that followed. “Pay particular attention to posts with pictures of candles, people wearing masks, an entirely black image or other efforts to escalate or hype the incident,” read an agency directive to local offices.

Larger numbers of online memorials began to disappear. The police detained several people who formed groups to archive deleted posts.

In Hangzhou, propaganda workers on round-the-clock shifts wrote up reports describing how they were ensuring people saw nothing that contradicted the soothing message from the Communist Party: that it had the virus firmly under control.

Officials in one district reported that workers in their employ had posted online comments that were read more than 40,000 times, “effectively eliminating city residents’ panic.” Workers in another county boasted of their “severe crackdown” on what they called rumors: 16 people had been investigated by the police, 14 given warnings and two detained. One district said it had 1,500 “cybersoldiers” monitoring closed chat groups on WeChat, the popular social app.

Researchers have estimated that hundreds of thousands of people in China work part-time to post comments and share content that reinforces state ideology. Many of them are low-level employees at government departments and party organizations. Universities have recruited students and teachers for the task. Local governments have held training sessions for them.

Local officials turned to informants and trolls to control opinion.

Engineers of the Troll

Government departments in China have a variety of specialized software at their disposal to shape what the public sees online.

One maker of such software, Urun, has won at least two dozen contracts with local agencies and state-owned enterprises since 2016, government procurement records show. According to an analysis of computer code and documents from Urun, the company’s products can track online trends, coordinate censorship activity and manage fake social media accounts for posting comments.

One Urun software system gives government workers a slick, easy-to-use interface for quickly adding likes to posts. Managers can use the system to assign specific tasks to commenters. The software can also track how many tasks a commenter has completed and how much that person should be paid.

According to one document describing the software, commenters in the southern city of Guangzhou are paid $25 for an original post of longer than 400 characters. Flagging a negative comment for deletion earns them 40 cents. Reposts are worth one cent apiece.
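To make the scale of those rates concrete, the arithmetic can be sketched as below. This is only an illustration built on the three figures quoted above; the task labels, the `payout` function and the sample workload are hypothetical and do not come from Urun's code or documents.

```python
from decimal import Decimal

# Per-task rates in US dollars, as quoted in the article.
# The dictionary keys are hypothetical labels chosen for this sketch.
RATES = {
    "original_post": Decimal("25.00"),      # original post longer than 400 characters
    "flag_for_deletion": Decimal("0.40"),   # flagging a negative comment for deletion
    "repost": Decimal("0.01"),              # one repost
}


def payout(task_counts):
    """Return the total owed for a mapping of task label -> completed count."""
    return sum(
        (RATES[task] * count for task, count in task_counts.items()),
        Decimal("0"),
    )


# Hypothetical workload: 2 long posts, 10 flags and 100 reposts.
example = {"original_post": 2, "flag_for_deletion": 10, "repost": 100}
print(payout(example))
```

At these rates, a single long original post pays as much as 2,500 reposts.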

Urun makes a smartphone app that streamlines their work. They receive tasks within the app, post the requisite comments from their personal social media accounts, then upload a screenshot, ostensibly to certify that the task was completed.

The company also makes video game-like software that helps train commenters, documents show. The software splits a group of users into two teams, one red and one blue, and pits them against each other to see which can produce more popular posts.

Other Urun code is designed to monitor Chinese social media for “harmful information.” Workers can use keywords to find posts that mention sensitive topics, such as “incidents involving leadership” or “national political affairs.” They can also manually tag posts for further review.

In Hangzhou, officials appear to have used Urun software to scan the Chinese internet for keywords like “virus” and “pneumonia” in conjunction with place names, according to company data.

A Great Sea of Placidity

By the end of February, the emotional wallop of Li’s death seemed to be fading. CAC workers around Hangzhou continued to scan the internet for anything that might perturb the great sea of placidity.

One city district noted that web users were worried about how their neighborhoods were handling the trash left by people who were returning from out of town and potentially carrying the virus. Another district observed concerns about whether schools were taking adequate safety measures as students returned.

On March 12, the agency’s Hangzhou office issued a memo to all branches about new national rules for internet platforms. Local offices should set up special teams for conducting daily inspections of local websites, the memo said. Those found to have violations should be “promptly supervised and rectified.”

The Hangzhou CAC had already been keeping a quarterly scorecard for evaluating how well local platforms were managing their content. Each site started the quarter with 100 points. Points were deducted for failing to adequately police posts or comments. Points might also be added for standout performances.

In the first quarter of 2020, two local websites lost 10 points each for “publishing illegal information related to the epidemic,” that quarter’s score report said. A government portal received an extra two points for “participating actively in opinion guidance” during the outbreak.
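The scorecard arithmetic described above is simple enough to sketch. The snippet assumes only what the article reports: a starting balance of 100 points, deductions for violations and bonuses for standout performance. The function name and the way adjustments are represented are hypothetical.

```python
BASE_SCORE = 100  # each site starts the quarter with 100 points, per the article


def quarterly_score(adjustments):
    """Return a site's score after applying signed point adjustments.

    Negative values are deductions, positive values are bonuses.
    """
    return BASE_SCORE + sum(adjustments)


# First quarter of 2020, using the figures reported in the article:
penalized_site = quarterly_score([-10])   # "publishing illegal information related to the epidemic"
government_portal = quarterly_score([2])  # "participating actively in opinion guidance"
print(penalized_site, government_portal)
```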

Over time, the CAC offices’ reports returned to monitoring topics unrelated to the virus: noisy construction projects keeping people awake at night, heavy rains causing flooding in a train station.

Then, in late May, the offices received startling news: Confidential public-opinion analysis reports had somehow been published online. The agency ordered offices to purge internal reports — particularly, it said, those analyzing sentiment surrounding the epidemic.

The offices wrote back in their usual dry bureaucratese, vowing to “prevent such data from leaking out on the internet and causing a serious adverse impact to society.”
