Dorsey Admits To Mob-Driven Censorship On Twitter During Heated Section 230 Hearing

28 October, 2020

Update (1412 ET): Twitter's Jack Dorsey appears to have unintentionally acknowledged that Twitter's unofficial moderation system is based simply on whoever shows the most outrage.

In response to questioning from Sen. Rick Scott, Dorsey replied:

“We don’t have a general policy around misleading information and misinformation... We rely upon people calling that speech out.”


That seems to be an admission of mob rule...

In summary, Twitter is effectively letting a small group of politically motivated users dictate what is true and what is fake news.

...sounds like a pretty fair system to us.

* * *

Update (1357 ET): Senator Rick Scott shared a series of tweets from dictators in Iran and Venezuela, plus one from the Chinese government, arguing that Twitter lets such dangerous dictators voice their opinions while unfairly targeting conservatives.

Dorsey, in response to Scott, said: "We don't have a general policy around misleading information and misinformation... We rely upon people calling that speech out."

* * *

Update (1325 ET): Senator Tammy Duckworth asked the CEOs of all three tech companies (Twitter, Facebook, and Google) for a personal commitment that their respective companies "will counter domestic disinformation that spreads the dangerous lies such as 'masks don't work'..."

... all three tech CEOs said "Yes."

* * *

Update (1245 ET): President Trump, who must be listening to the Section 230 hearing, just tweeted: "Media and Big Tech are not covering Biden Corruption!"

Trump also tweeted, "the USA doesn't have Freedom of the Press, we have Suppression of the Story, or just plain Fake News. So much has been learned in the last two weeks about how corrupt our Media is, and now Big Tech, maybe even worse. Repeal Section 230!"

As we noted earlier, on Oct. 6 Trump tweeted for an outright repeal of the law, using three exclamation points to emphasize his concern about the federal statute protecting social media companies as they unfairly censor conservatives.

* * *

Update (1235 ET): During the Section 230 hearing, Senator Ed Markey asked Facebook CEO Mark Zuckerberg whether he would commit that Facebook's algorithms "don't spread" violence after the election.

Zuckerberg responded by saying any calls for violence are in violation of Facebook's policies and "there are no exceptions" for political leaders.

* * *

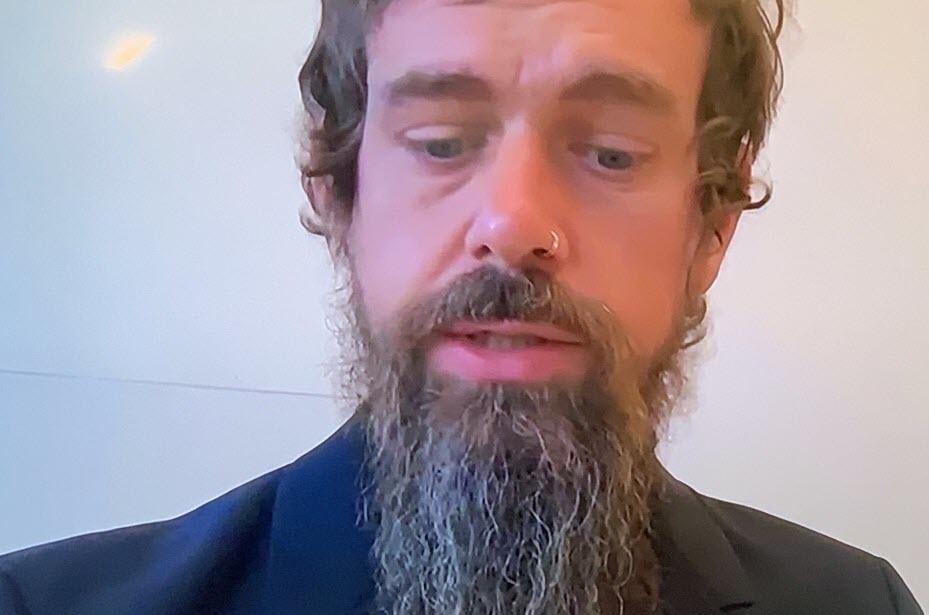

Update (1150 ET): As Senate Democrats and Republicans grill the CEOs of Facebook, Google, and Twitter, Senator Ted Cruz said the three tech companies pose the "single greatest threat to free speech in America and the greatest threat we have to free and fair elections."

Cruz asked Dorsey whether he has the ability to influence elections. Dorsey replied "no," an answer Cruz slammed as "absurd."

Dorsey, remaining calm, responded to Cruz: "We realize we need to earn trust more. We realize more accountability is needed."

Toward the end of the back and forth, Cruz laid into Dorsey: "Who the hell elected you and put you in charge of what the media are allowed to report and what the American people are allowed to hear?"

Dorsey kept his cool, but Cruz's aggressive rebuke appeared to rattle the tech CEO.

* * *

Facebook's Mark Zuckerberg, Twitter's Jack Dorsey, and Google's Sundar Pichai will testify virtually Wednesday morning before the Senate Commerce Committee about Section 230 of the Communications Decency Act, which shields social media companies from liability for content their users post.

The hearing, titled "Does Section 230's Sweeping Immunity Enable Big Tech Bad Behavior?", will begin at 10:00 a.m. ET. A handful of Republican senators are expected to grill the three tech CEOs for their blatant anti-conservative bias.

The hearing, which comes six days before the presidential election, addresses the long-held legal protections for online speech, an immunity that critics in both political parties say enables these companies to abdicate their responsibility to moderate content. The companies' biases have become increasingly transparent in recent weeks as the Hunter Biden laptop stories have been reported (and censored) on specific social media platforms. That has become an important topic among conservatives who feel tech companies are unfairly censoring them.

President Trump recently tweeted for an outright repeal of the law, using three exclamation points to emphasize his concern about the federal statute protecting social media companies as they unfairly censor conservatives.

Meanwhile, Attorney General Bill Barr and Republican legislators want to reform Section 230 by requiring social media platforms to become "politically neutral" in how they moderate content.

Here are the opening statements of Zuckerberg, Dorsey, and Pichai:

Facebook's Mark Zuckerberg takes a more measured tone in his testimony (excerpt, emphasis ours):

...the debate about Section 230 shows that people of all political persuasions are unhappy with the status quo. People want to know that companies are taking responsibility for combatting harmful content—especially illegal activity—on their platforms. They want to know that when platforms remove content, they are doing so fairly and transparently. And they want to make sure that platforms are held accountable.

Section 230 made it possible for every major internet service to be built and ensured important values like free expression and openness were part of how platforms operate. Changing it is a significant decision. However, I believe Congress should update the law to make sure it's working as intended. We support the ideas around transparency and industry collaboration that are being discussed in some of the current bipartisan proposals, and I look forward to a meaningful dialogue about how we might update the law to deal with the problems we face today.

At Facebook, we don't think tech companies should be making so many decisions about these important issues alone. I believe we need a more active role for governments and regulators, which is why in March last year I called for regulation on harmful content, privacy, elections, and data portability. We stand ready to work with Congress on what regulation could look like in these areas. By updating the rules for the internet, we can preserve what's best about it—the freedom for people to express themselves and for entrepreneurs to build new things—while also protecting society from broader harms. I would encourage this Committee and other stakeholders to make sure that any changes do not have unintended consequences that stifle expression or impede innovation.

Twitter's Jack Dorsey is more aggressive, warning, ironically, that "Section 230 is the Internet's most important law for free speech and safety. Weakening Section 230 protections will remove critical speech from the Internet." (excerpt, emphasis ours):

Twitter's purpose is to serve the public conversation. People from around the world come together on Twitter in an open and free exchange of ideas. We want to make sure conversations on Twitter are healthy and that people feel safe to express their points of view. We do our work recognizing that free speech and safety are interconnected and can sometimes be at odds. We must ensure that all voices can be heard, and we continue to make improvements to our service so that everyone feels safe participating in the public conversation—whether they are speaking or simply listening. The protections offered by Section 230 help us achieve this important objective.

As we consider developing new legislative frameworks, or committing to self-regulation models for content moderation, we should remember that Section 230 has enabled new companies—small ones seeded with an idea—to build and compete with established companies globally. Eroding the foundation of Section 230 could collapse how we communicate on the Internet, leaving only a small number of giant and well-funded technology companies.

We should also be mindful that undermining Section 230 will result in far more removal of online speech and impose severe limitations on our collective ability to address harmful content and protect people online. I do not think anyone in this room or the American people want less free speech or more abuse and harassment online. Instead, what I hear from people is that they want to be able to trust the services they are using.

I want to focus on solving the problem of how services like Twitter earn trust. And I also want to discuss how we ensure more choice in the market if we do not. During my testimony, I want to share our approach to earn trust with people who use Twitter. We believe these principles can be applied broadly to our industry and build upon the foundational framework of Section 230 for how to moderate content online. We seek to earn trust in four critical ways: (1) transparency, (2) fair processes, (3) empowering algorithmic choice, and (4) protecting the privacy of the people who use our service. My testimony today will explain our approach to these principles.

Google's Sundar Pichai warns in his prepared remarks that reforming Section 230 would have consequences for businesses and consumers.

As you think about how to shape policy in this important area, I would urge the Committee to be very thoughtful about any changes to Section 230 and to be very aware of the consequences those changes might have on businesses and consumers.
